Intro

About me

🚀 Hi there, I’m Beren!

I’m a Lead Software Engineer passionate about creating intuitive, AI-powered experiences. Currently, I lead a multicultural team of five engineers at Arbrea Labs AG in Zurich, Switzerland, where we build cutting-edge iOS apps leveraging Augmented Reality (AR) and Artificial Intelligence (AI). Our mission? To revolutionize the field of aesthetic medicine by helping surgeons and patients visualize potential plastic surgery outcomes.

🎓 From Ph.D. Research to Real-World Impact

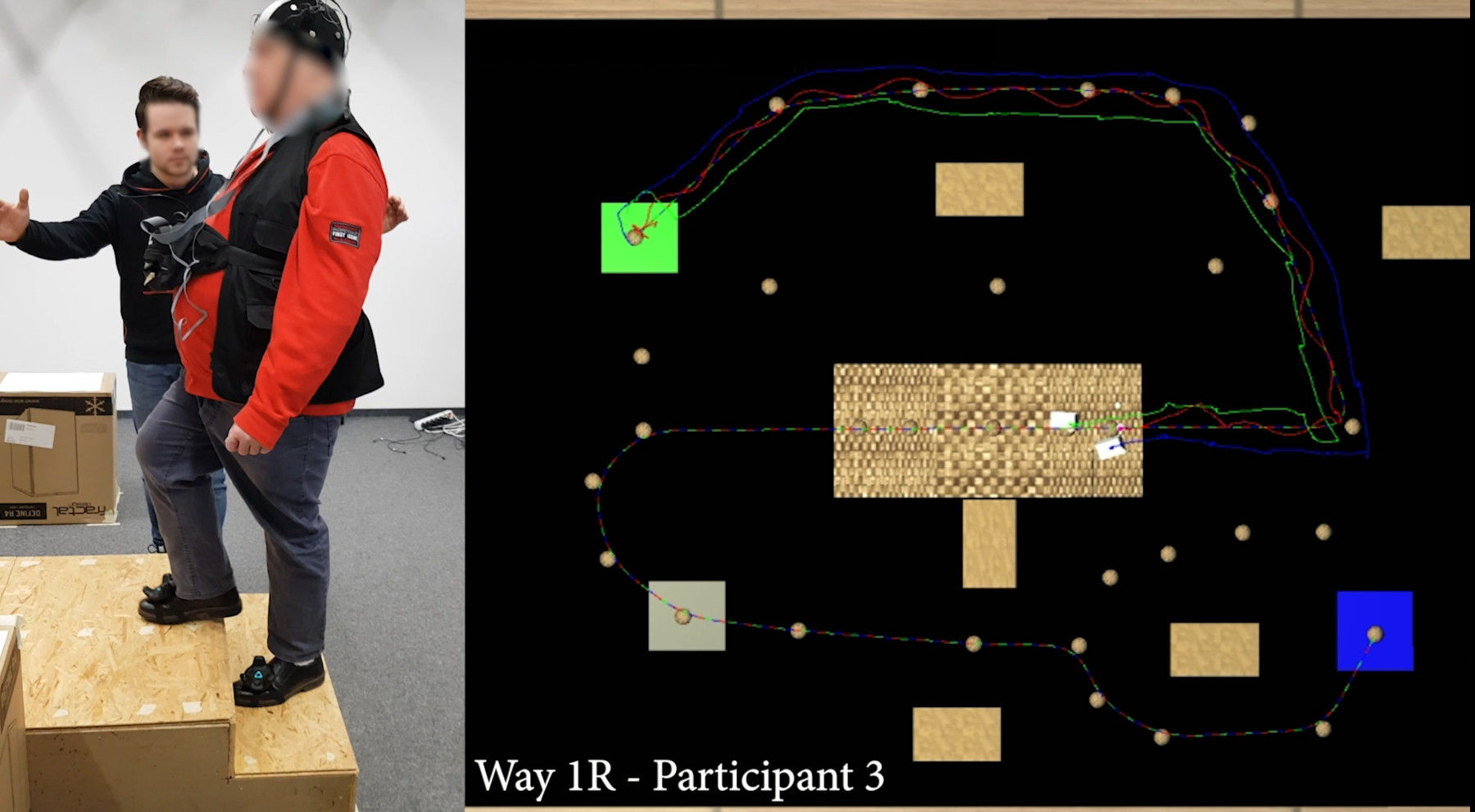

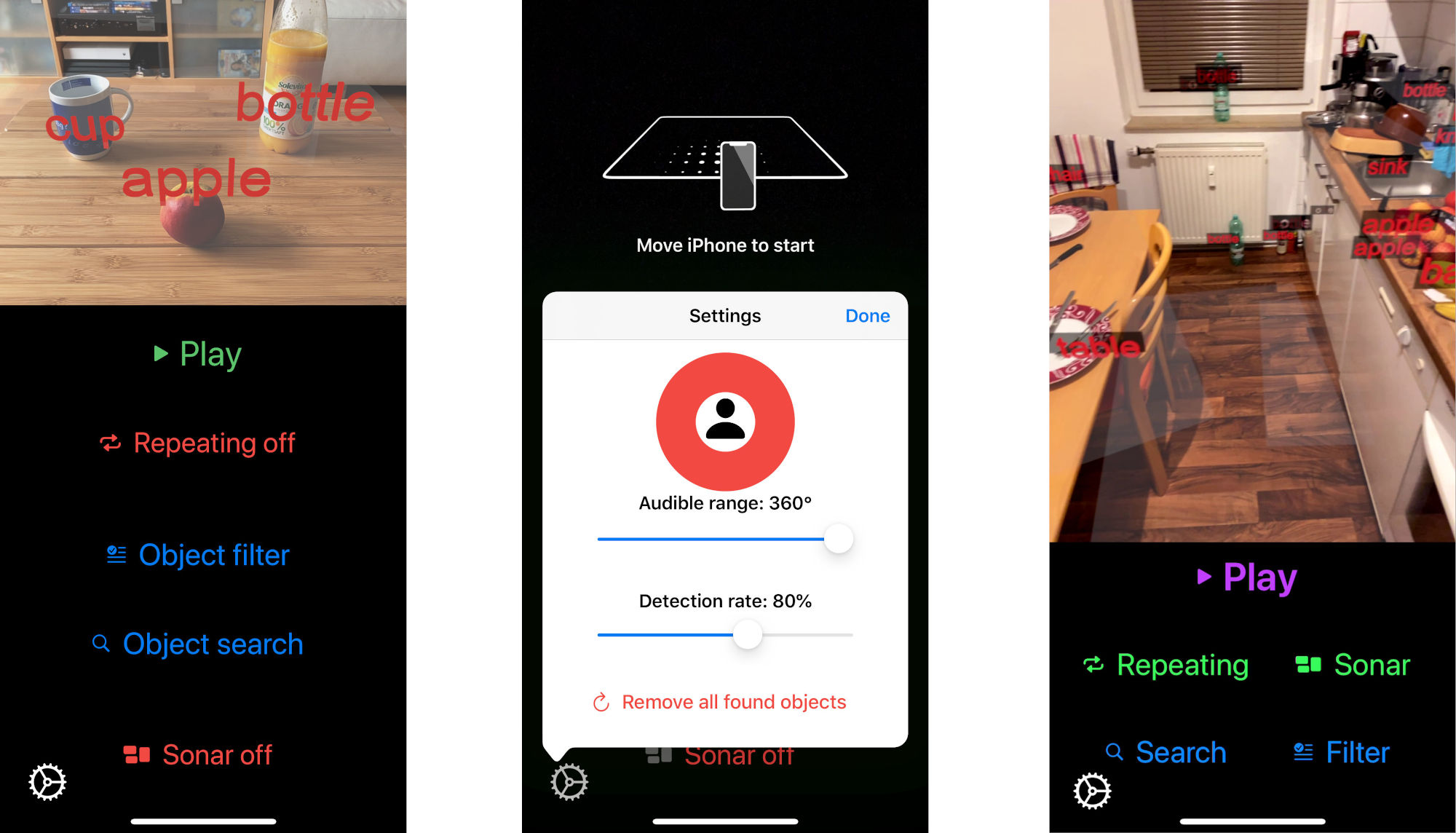

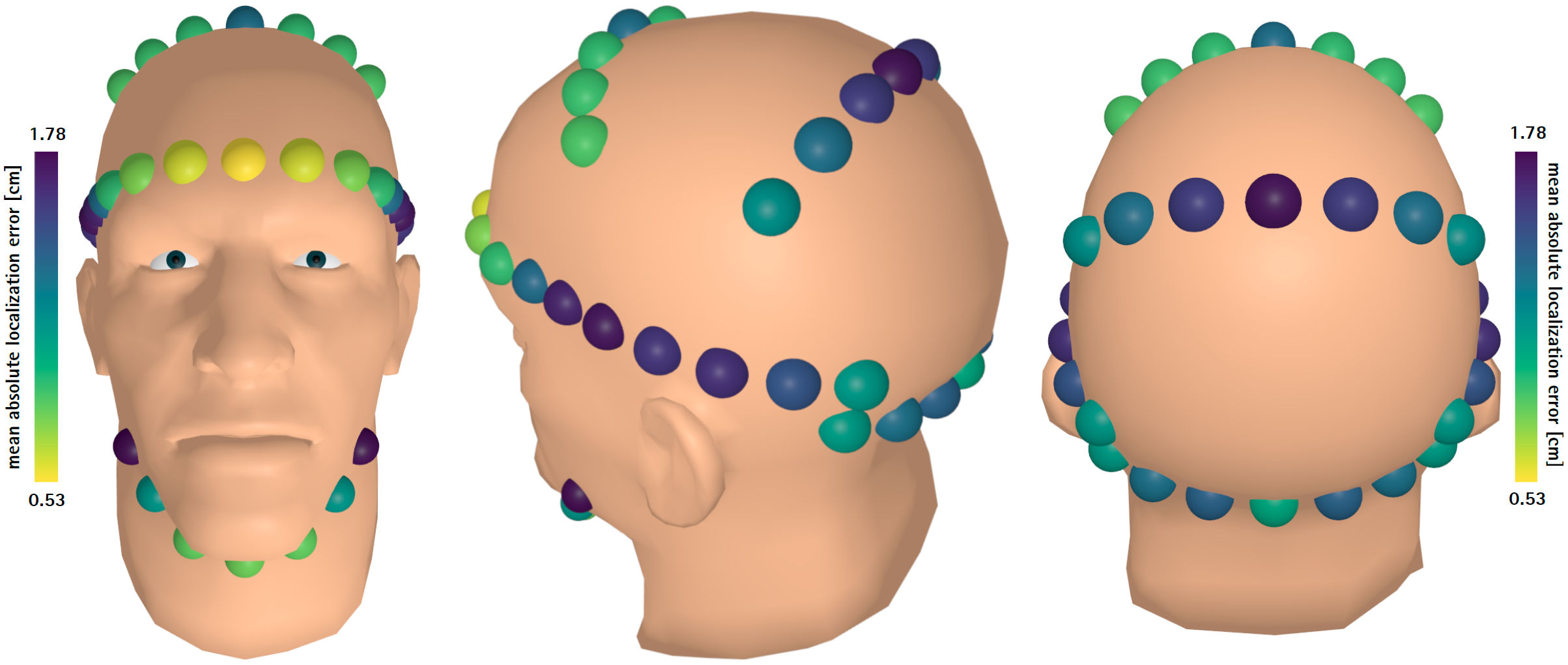

Before moving to Switzerland in 2021, I completed my Ph.D. in Human-Computer Interaction at the University of Hannover, where I explored tactile feedback in AR/VR. My main research project, HapticHead, introduced a 24-actuator vibrotactile display that enhances immersion in virtual environments—whether it’s guiding visually impaired users or simulating the sensation of raindrops in VR.

Beyond my core research, I delved into AI applications, including:

- AhemPreventor – An AI-powered tool that detects filler words in speech and provides real-time, discreet tactile feedback to improve public speaking.

- Concrete Damage Detection – Using Deep Learning to assess structural damage via ultrasonic signals in collaboration with material scientists.

🎤 Tackling Stage Fright with AI

One of my passion projects is Stage Fright Buddy, an AI-driven personalized training app for public speaking. Built with Swift 6 and SwiftUI, it helps users overcome performance anxiety through voice-based chat, curated challenges, and adaptive feedback, continuously optimized with A/B testing and behavioral analytics.

📱 Building Exceptional Mobile Experiences

With over 10 years of experience in iOS and Android development, I’ve helped companies scale their mobile products. Before Arbrea, I developed and maintained several event management apps for miovent.de, a subsidiary of Deutsche Messe AG.

🔍 What’s Next?

I thrive in innovative, fast-paced environments where I can combine AI, HCI, and mobile development to build next-gen applications. If you’re looking for a pragmatic Senior Software Engineer or Tech Lead with a passion for creating intelligent, user-friendly software, let’s connect!

Skills

Leadership skills

I currently lead a team of five software engineers at Arbrea Labs AG. Previously, I gave lectures on various topics to up to 600 CS students, and I have supervised dozens of Bachelor's and Master's theses, both during my Ph.D. and at Arbrea Labs AG in collaboration with ETH.

Mobile App Development

My side hustle during my studies (mostly Android) and my current job (iOS). I like polishing the UX of apps through gamification and animations so that they feel fun and engaging to use.

Programming languages

I prefer modern languages such as Swift, Kotlin, and Python, while still knowing how basic C and pointers work. I'm not lost without ChatGPT 😃

Artificial Intelligence

I have trained neural networks and deployed several AI-powered mobile apps.

Data analysis and visualization

I conducted dozens of user studies during my Ph.D. and analyzed the data with statistical methods.

Social skills

Empathy, pragmatism, traveling, and cultural appreciation. Others appreciate my smile, my positive attitude, and my can-do character.